Comment and Investigation in RAID Performance

RAID 5 vs RAID 10 has been discussed for ages; it's common knowledge that RAID 10 offers better performance, but how much better depends on the actual implementation, hardware and use case.

I just got a server with 4 x 16TB disks, all brand new, and decided to test whether the performance gains of RAID 10 justify the smaller usable disk space. We plan to use it as a backup server, so our workload is mostly sequential writes.
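To put the "smaller usable disk space" into numbers, here is a minimal sketch of the capacity arithmetic for this 4 x 16TB setup. The function name `usable_capacity_tb` is my own, and the calculation ignores metadata and filesystem overhead.

```python
def usable_capacity_tb(level: str, disks: int, disk_tb: float) -> float:
    """Usable capacity in TB, ignoring metadata and filesystem overhead."""
    if level == "raid5":
        # One disk's worth of space is used for parity.
        return (disks - 1) * disk_tb
    if level == "raid10":
        # Every block is stored twice (two copies), so half the raw space remains.
        return disks * disk_tb / 2
    raise ValueError(f"unsupported level: {level}")


raid5_tb = usable_capacity_tb("raid5", 4, 16.0)
raid10_tb = usable_capacity_tb("raid10", 4, 16.0)
print(f"RAID 5: {raid5_tb:.0f} TB, RAID 10: {raid10_tb:.0f} TB")
```

So the question is whether RAID 10 is fast enough to justify giving up 16TB of usable space.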

Testing Methodology

All tests are executed using fio with iodepth=32, direct=1, ioengine=libaio, refill_buffers and a time limit of 60 seconds. The sequential read and write tests use a 1024k block size and 1 process. The random read/write tests use a 4k block size and 4 processes. For testing, a 30GB partition at the start of each disk is used. The Linux kernel version is 5.10.
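The parameters above could be turned into fio command lines roughly like this. This is a sketch, not the exact invocation I used: the device path `/dev/sdX1` is a placeholder, and `--time_based` and `--group_reporting` are my assumptions on top of the parameters listed above. The script only prints the commands; keep in mind that the write tests overwrite whatever is on the target device.

```python
import shlex

# (fio rw mode, block size, number of processes) per test, as described above.
TESTS = {
    "seq-read": ("read", "1024k", 1),
    "seq-write": ("write", "1024k", 1),
    "rand-read": ("randread", "4k", 4),
    "rand-write": ("randwrite", "4k", 4),
}


def fio_command(name: str, device: str) -> list[str]:
    """Build the fio argument list for one of the four tests."""
    rw, block_size, numjobs = TESTS[name]
    return [
        "fio",
        f"--name={name}",
        f"--filename={device}",
        f"--rw={rw}",
        f"--bs={block_size}",
        f"--numjobs={numjobs}",
        "--iodepth=32",
        "--direct=1",
        "--ioengine=libaio",
        "--refill_buffers",
        "--runtime=60",
        "--time_based",       # assumption: run the full 60 seconds
        "--group_reporting",  # assumption: one summary for all processes
    ]


for test_name in TESTS:
    # /dev/sdX1 is a placeholder for the 30GB test partition or the md device.
    print(shlex.join(fio_command(test_name, "/dev/sdX1")))
```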

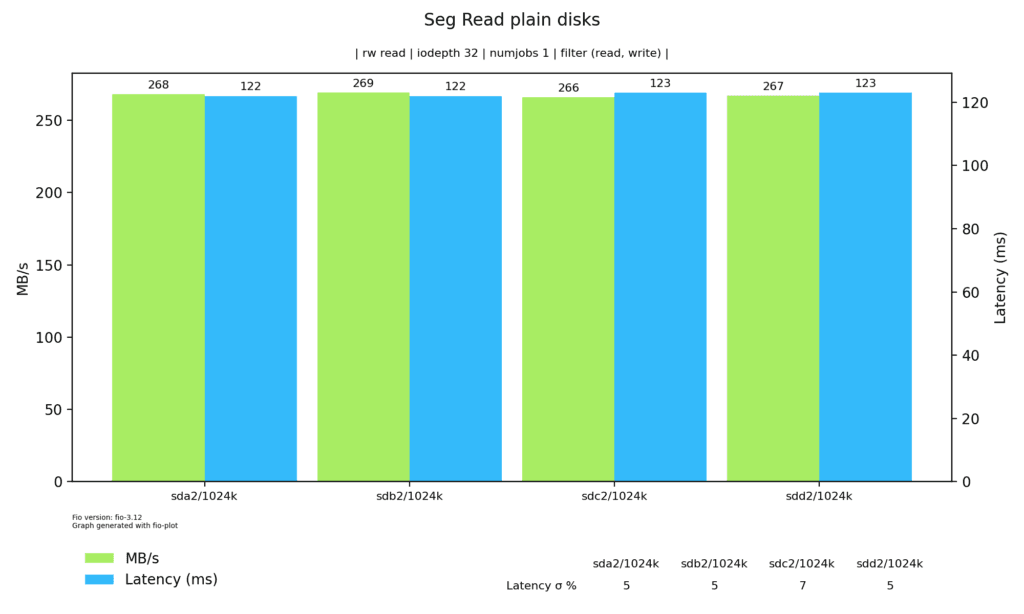

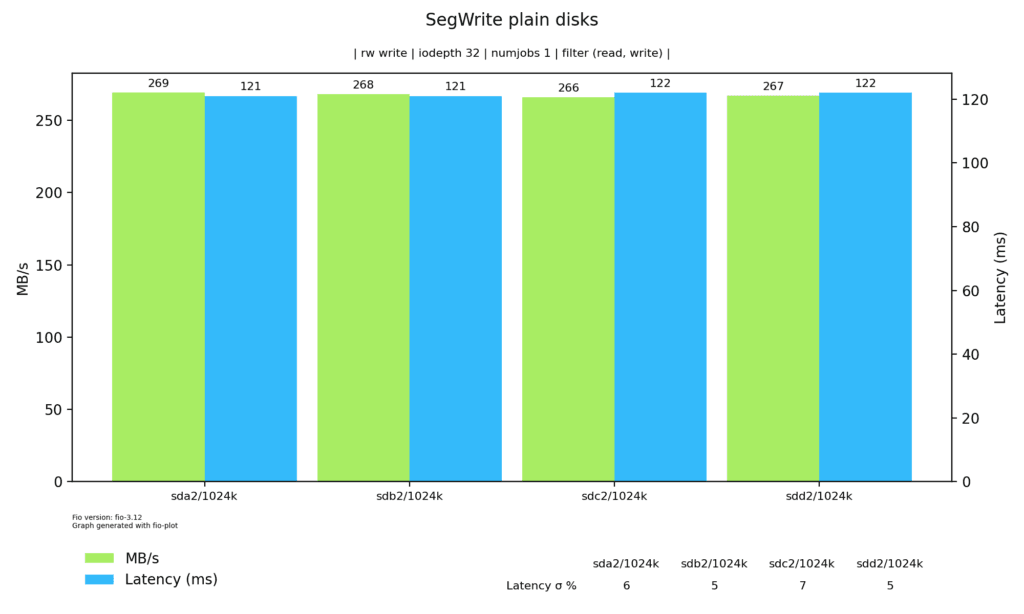

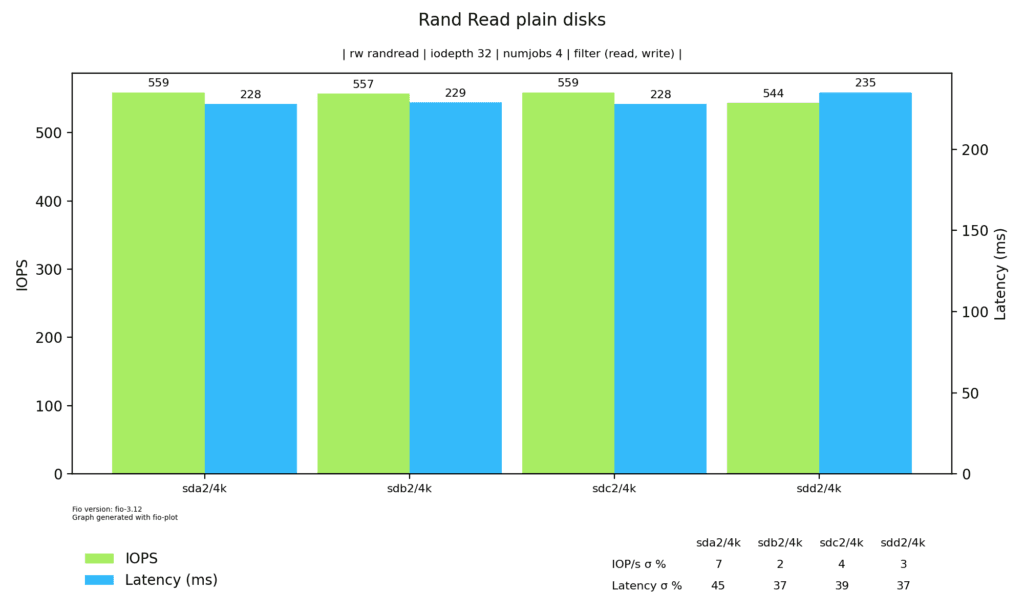

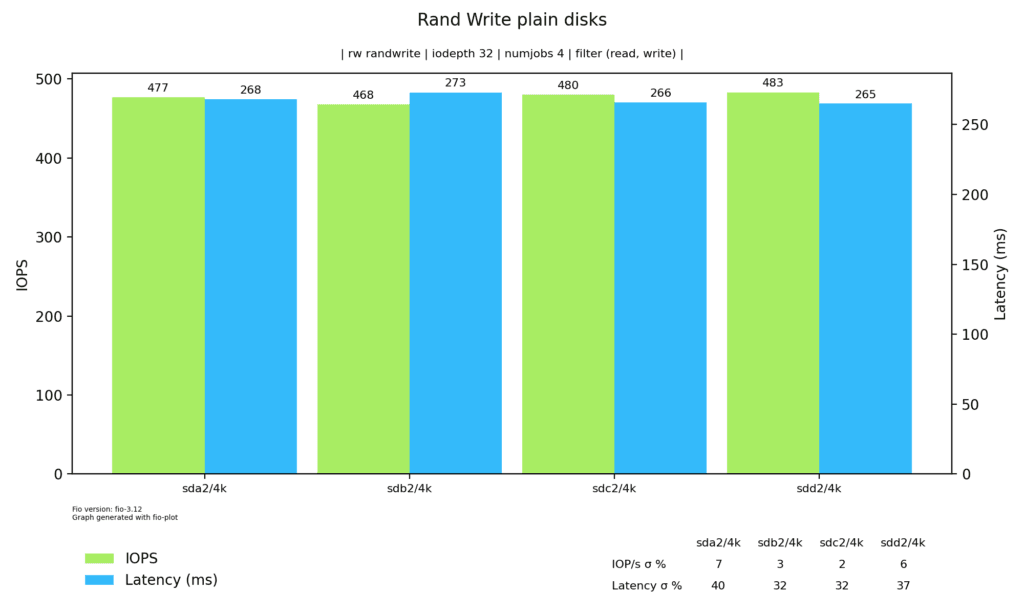

Plain Disk Performance

Before I get to the actual testing, I want to get a baseline of a single disk's performance and also make sure that all disks perform similarly.

Everything looks nice and flat here, as it should. We have around 268MB/s sequential read and write, and a random performance of about 550 read / 480 write IOPS.
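If you prefer a number over eyeballing the graphs, a small check like the following can flag a disk that falls out of line. It is only a sketch: the 5% tolerance is an arbitrary assumption, and the example values are placeholders for illustration, not my measured per-disk results.

```python
def max_deviation(values: list[float]) -> float:
    """Largest relative deviation from the mean, as a fraction."""
    mean = sum(values) / len(values)
    return max(abs(v - mean) / mean for v in values)


def disks_similar(values: list[float], tolerance: float = 0.05) -> bool:
    """True if every disk is within `tolerance` of the average."""
    return max_deviation(values) <= tolerance


# Placeholder throughput values in MB/s, for illustration only.
example_mb_s = [268.0, 266.0, 270.0, 268.0]
print(disks_similar(example_mb_s))
```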

RAID 5 vs RAID 10

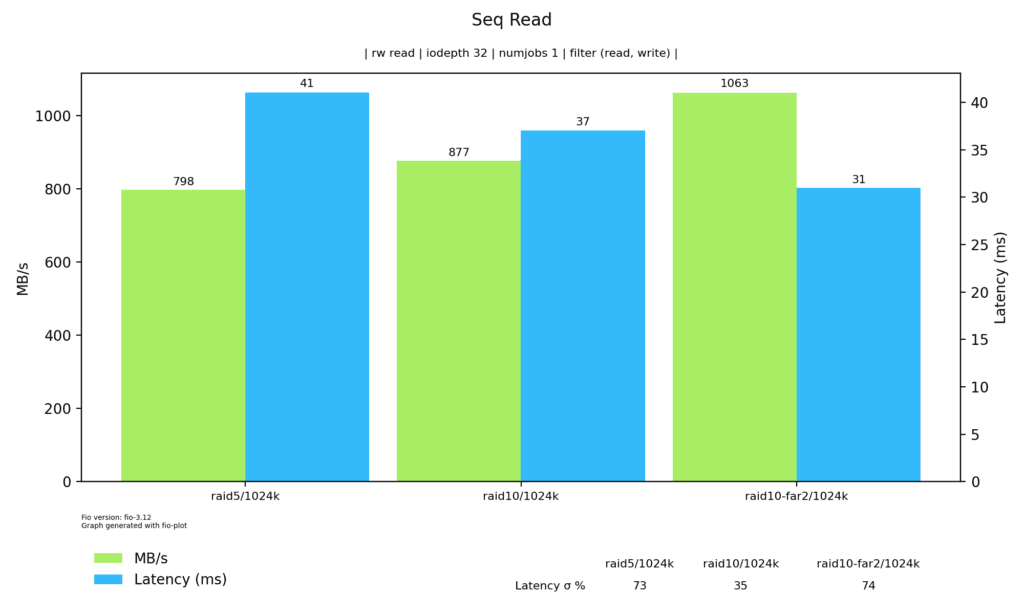

The difference in sequential read performance between RAID 5 and RAID 10 is smaller than I would have guessed. The far2 layout really makes a difference here.

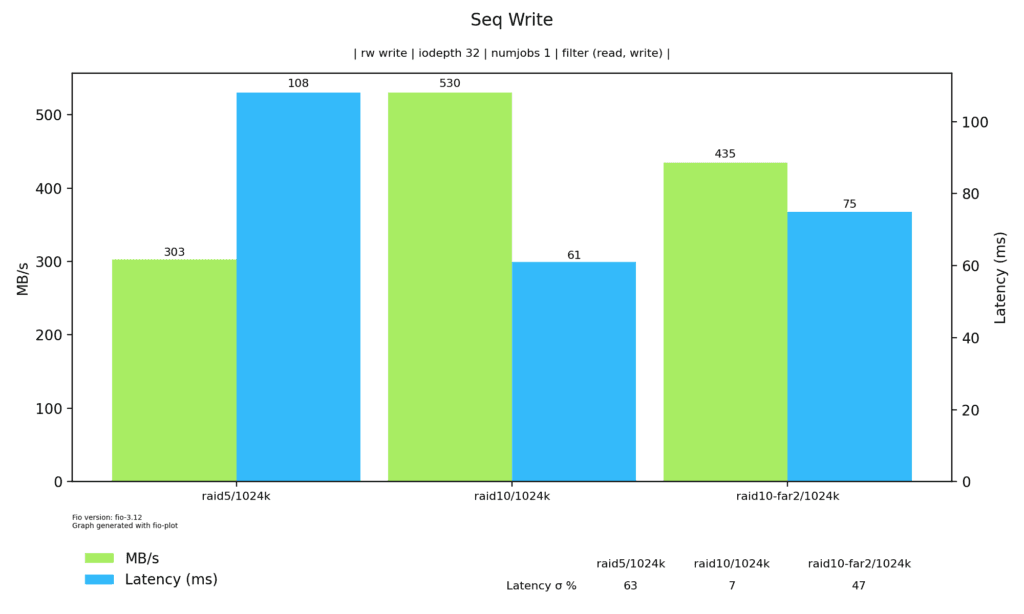

With sequential writes, we see real differences. The "classic" RAID 10 (the default near 2 layout) shows the best write speed, while far2 and RAID 5 are each a step down.

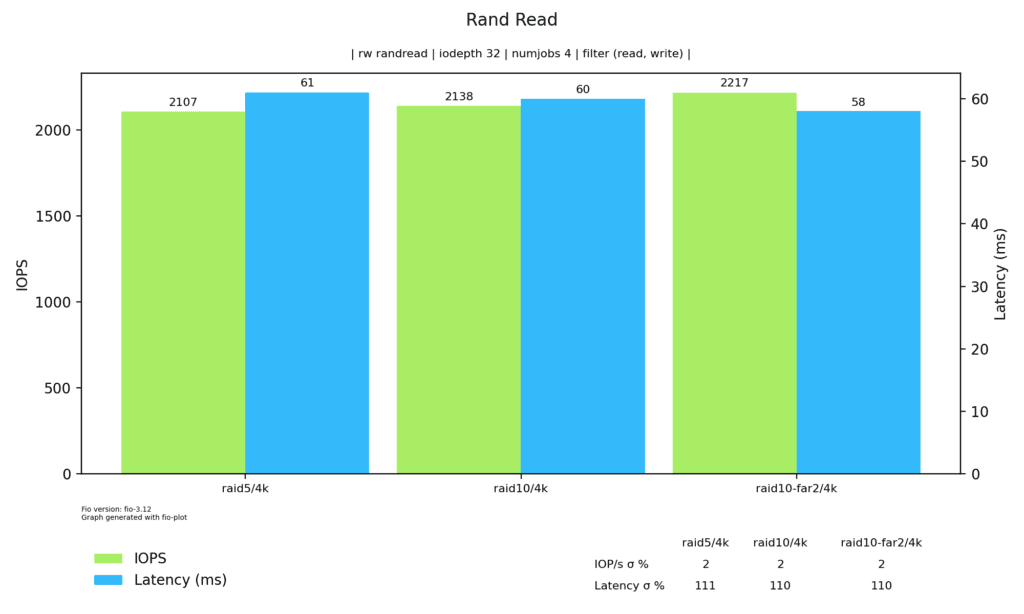

Interestingly, there is not much difference in the random read test, which I would not have expected.

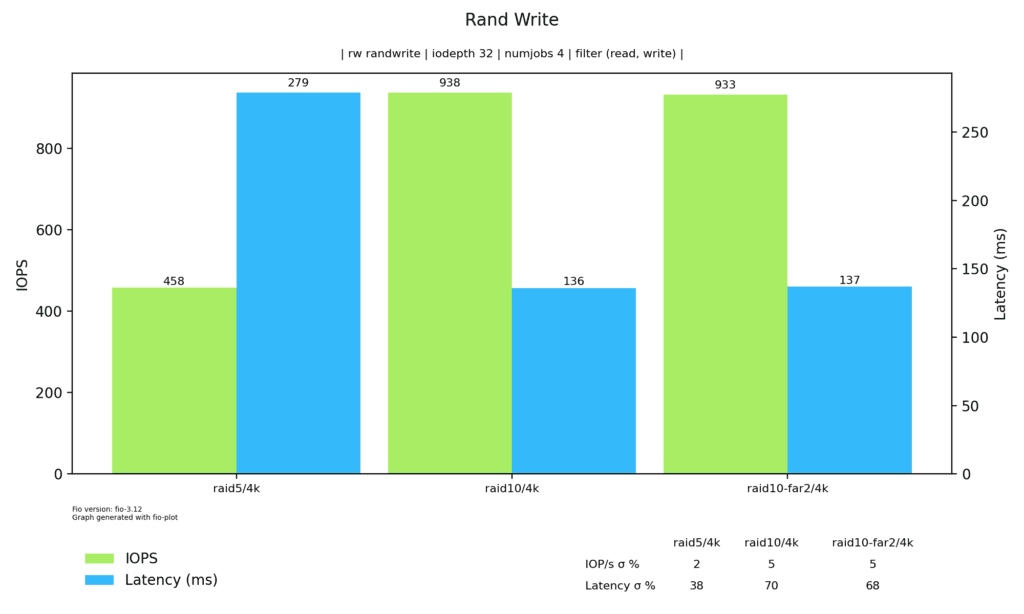

The random write graph looks very similar to the sequential write graph. The only difference is that the classic RAID 10 handles random writes better than sequential ones.

mdadm offers multiple layouts that determine how the RAID works internally. For RAID 10, I tested the "near 2" and "far 2" layouts, where "near 2" is the default. From the docs:

The advantage of this (… far 2) layout is that MD can easily spread sequential reads over the devices, making them similar to RAID0 in terms of speed. The cost is more seeking for writes, making them substantially slower.

Yes, this can be seen in the benchmark graphs.

Conclusions

From my tests, it looks like the difference in read performance is negligible. For writes, RAID 10 has a huge advantage.

For our backup server, we are going with RAID 5, because it is 3x faster than the server's 1 Gbit network card and won't bottleneck our backup or restore processes.
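The reasoning behind that decision is simple arithmetic, sketched below. The 125 MB/s figure is the theoretical maximum of a 1 Gbit link (real transfers are lower because of protocol overhead), and the array throughput is only what the "3x" above implies, not a separately measured value.

```python
def link_limit_mb_s(gbit: float) -> float:
    """Theoretical maximum throughput of a network link in MB/s."""
    return gbit * 1000 / 8


network_mb_s = link_limit_mb_s(1)   # 1 Gbit network card
implied_array_mb_s = 3 * network_mb_s  # "3x faster" than the network

print(f"network limit: {network_mb_s:.0f} MB/s")
print(f"implied RAID 5 throughput: {implied_array_mb_s:.0f} MB/s")
print("network is the bottleneck:", implied_array_mb_s > network_mb_s)
```

As long as the array stays above the network limit, the faster RAID 10 writes would not make backups finish any sooner.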

If you have a more critical use case, I advise running your own benchmarks that better reflect your workload. Especially if you are using SSDs, the results can be drastically different.